AI as Your Therapist: Can Emotional Algorithms Really Heal Us?

The conversation around mental health has shifted dramatically in the last decade—and technology has stepped in to fill the gaps. With rising global demand for therapy and limited access to mental health professionals, AI therapy tools have emerged as a revolutionary, and sometimes controversial, solution. Apps like Woebot, Wysa, and Replika promise 24/7 emotional support powered by algorithms that simulate empathy. But can a machine truly understand pain, trauma, or loneliness?

In today’s world, millions are turning to AI chatbots not just for casual conversations but for therapeutic relief. These tools rely on natural language processing (NLP) and cognitive behavioral therapy (CBT) frameworks to detect emotional cues, suggest coping strategies, and even track users’ mental health over time. They offer affordability, accessibility, and anonymity—qualities that traditional therapy sometimes struggles to match.

However, the growing popularity of AI therapists also raises deep ethical and emotional questions. What happens when our most intimate feelings are processed by an algorithm? Can digital empathy ever replace human understanding? And perhaps most importantly—are we building a future where emotional healing depends on data-driven dialogue?

As we dive deeper into this technological evolution, we’ll explore the science, promise, and pitfalls of AI as a mental health companion—and whether emotional algorithms can truly heal the human heart.

The Rise of AI Therapy: Why People Are Turning to Machines for Healing

The Accessibility Crisis in Traditional Therapy

Mental health care remains a luxury for many. Between high costs, long waiting lists, and stigma surrounding therapy, millions go untreated. In countries where the therapist-to-patient ratio is alarmingly low, AI therapy platforms have stepped in to bridge the gap. These tools operate around the clock, offering instant responses, guided exercises, and non-judgmental listening—all through your smartphone. For many, it’s the first time they’ve had access to consistent emotional support.

The Appeal of Anonymity and Judgment-Free Spaces

One of the strongest appeals of AI therapy is anonymity. Users can open up about sensitive issues without fear of being judged, misunderstood, or exposed. For those dealing with trauma, addiction, or identity struggles, talking to an AI can feel safer than talking to a human. This sense of emotional safety often encourages honesty, leading to meaningful self-reflection, even without a human therapist present.

Technology as a Democratizer of Mental Health

AI therapy represents a kind of democratization of mental health—bringing psychological tools to anyone with an internet connection. It’s particularly impactful in regions where mental health resources are scarce or social stigma prevents people from seeking traditional help. The technology doesn’t just provide conversation; it delivers education, coping techniques, and personalized mental health check-ins, making emotional wellness more accessible than ever before.

How Emotional Algorithms Work: Inside the Machine’s Mind

The Role of NLP and Emotional Intelligence in AI Therapy

At the heart of AI therapy lies natural language processing (NLP), the same technology that allows AI systems to process and respond to human text. Emotional algorithms are trained on massive datasets of human conversations, learning to detect tone, sentiment, and even subtle shifts in mood. By analyzing your words, an AI therapist can flag signs of emotional distress, anxiety, or depressive patterns and respond with pre-programmed therapeutic interventions.
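To make the idea of "detecting emotional cues" concrete, here is a deliberately simplified sketch. Real apps rely on models trained on large datasets, not hand-written word lists. The `CUE_LEXICON` categories and the function names below are assumptions made up for illustration, not taken from any actual product.

```python
import re
from collections import Counter
from typing import Optional

# Hypothetical toy lexicon. Production systems learn such cues from data
# instead of relying on a fixed word list like this one.
CUE_LEXICON = {
    "anxiety": {"anxious", "worried", "panic", "nervous", "overwhelmed"},
    "low_mood": {"sad", "hopeless", "empty", "worthless"},
    "loneliness": {"lonely", "alone", "isolated"},
}


def detect_cues(message: str) -> Counter:
    """Count how many lexicon words from each category appear in a message."""
    words = re.findall(r"[a-z']+", message.lower())
    counts = Counter()
    for category, vocabulary in CUE_LEXICON.items():
        hits = sum(1 for word in words if word in vocabulary)
        if hits:
            counts[category] = hits
    return counts


def dominant_cue(message: str) -> Optional[str]:
    """Return the category with the most hits, or None if nothing matched."""
    counts = detect_cues(message)
    if not counts:
        return None
    return counts.most_common(1)[0][0]
```

A word-count approach like this misses sarcasm, negation ("I'm not sad"), and context, which is one reason real systems use statistical models and still make mistakes.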

Cognitive Behavioral Therapy (CBT) in Code

Most AI therapy tools rely on principles of Cognitive Behavioral Therapy (CBT), a widely used psychological framework that helps users challenge negative thought patterns. When users express sadness or stress, the chatbot might encourage them to reframe their thoughts or suggest exercises like gratitude journaling or deep breathing. This structured, evidence-based approach allows AI to provide consistent and replicable support that never tires, although the software can still misread what a user means.
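The "CBT in code" idea can be pictured as a small set of rules that map what a user says to a structured exercise. The sketch below is only an illustration. The trigger phrases, the exercise wording, and the `suggest_exercise` function are all hypothetical, and real tools use far richer dialogue logic.

```python
# Hypothetical trigger phrases for each state. Dictionary order matters:
# thought patterns are checked first so reframing takes priority.
TRIGGERS = {
    "negative_thought": ("always fail", "never get anything right", "worthless"),
    "stress": ("stressed", "overwhelmed", "under pressure"),
    "sadness": ("sad", "unhappy", "feeling down"),
}

# Hypothetical exercise prompts, loosely modeled on common CBT techniques.
EXERCISES = {
    "negative_thought": "What evidence supports this thought, and what evidence goes against it?",
    "stress": "Let's pause for a few slow, deep breaths.",
    "sadness": "Try writing down three things you are grateful for today.",
}

DEFAULT_PROMPT = "Tell me more about how you are feeling."


def suggest_exercise(message: str) -> str:
    """Return the first exercise whose trigger phrase appears in the message."""
    text = message.lower()
    for state, phrases in TRIGGERS.items():
        if any(phrase in text for phrase in phrases):
            return EXERCISES[state]
    return DEFAULT_PROMPT
```

Because the rules are fixed, the same input always produces the same response. That is the "consistent and replicable" quality described above, and also the reason such a system cannot improvise the way a human therapist can.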

Data, Privacy, and the Limits of Machine Empathy

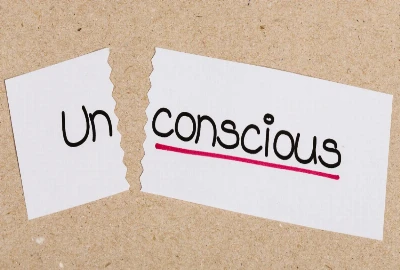

While AI can mimic empathy, it doesn’t feel it. Machines lack true emotional consciousness; their understanding of human pain is statistical, not soulful. Moreover, data privacy remains a major concern. AI therapy apps often collect sensitive information—text inputs, emotional trends, even biometric data—which could be vulnerable to misuse. The therapeutic relationship depends on trust, and in digital therapy, that trust lies not with a person, but with a system.
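One way an app could reduce the privacy risk is to strip obvious identifiers before anything is stored. The sketch below is only an assumption about how that might look. The function names, the regular expressions, and the secret key are hypothetical, and simple patterns like these will not catch every piece of identifying detail.

```python
import hashlib
import hmac
import re

# Simplified patterns for illustration; they will miss many real-world formats.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(message: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    message = EMAIL_PATTERN.sub("[EMAIL]", message)
    return PHONE_PATTERN.sub("[PHONE]", message)


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Derive a stable pseudonym so records can be linked without the raw ID."""
    digest = hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


# Hypothetical usage: the key would live in a secrets manager, not in code.
example_key = b"replace-with-a-real-secret"
stored_record = {
    "user": pseudonymize("user-42", example_key),
    "text": redact("Reach me at jane.doe@example.com please"),
}
```

Redaction and pseudonymization lower the damage if data leaks, but they do not make a conversation anonymous. The content of what someone shares can itself be identifying.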

The Benefits and Risks of AI as a Mental Health Companion

24/7 Accessibility and Scalability

Unlike traditional therapists, who work set hours, AI therapy is always available. This continuous accessibility allows users to seek help during moments of crisis, insomnia, or loneliness. For mental health providers, it also means scalability: AI can handle thousands of conversations simultaneously, offering basic support to many people who might otherwise go without help.

Affordability and Global Reach

One of AI therapy’s biggest strengths is cost efficiency. Subscription-based AI therapy apps are typically much cheaper than in-person sessions. This affordability has made mental health support available to millions in developing regions, rural areas, and underserved populations. The global reach of AI therapy represents a major step toward mental health equity, if implemented responsibly.

The Emotional Risks of Artificial Support

However, the emotional risks of relying on AI for therapy are substantial. Over-dependence on chatbots can create false intimacy, where users mistake programmed responses for genuine human care. In extreme cases, people may withdraw from human relationships, preferring the predictability of a machine. Moreover, without proper clinical oversight, AI may fail to detect signs of severe mental illness or suicidal ideation—posing real safety risks.

The Ethical Dilemma: Can Machines Truly Heal Human Emotions?

The Illusion of Empathy

Empathy is the cornerstone of therapy—and while AI can simulate compassion, it cannot feel it. Emotional algorithms respond based on probability, not intuition. This raises a profound ethical question: is simulated empathy enough for healing? While AI can help users reflect, track patterns, and practice coping skills, it cannot replace the nuanced, unpredictable warmth of human understanding. The risk lies in mistaking emotional simulation for authentic connection.

Algorithmic Bias and Cultural Sensitivity

AI systems are trained on datasets that may not reflect the full diversity of human experiences. This creates algorithmic bias, where responses may be culturally insensitive, gender-biased, or simply inappropriate. For instance, an AI trained on Western emotional models may fail to understand grief expressions in other cultures. Ethical AI therapy must therefore prioritize inclusivity, transparency, and continuous learning to truly support a global audience.

Balancing Technology and Humanity

Ultimately, the goal should not be to replace human therapists but to augment human care. AI can serve as a valuable first step—offering emotional triage, tracking progress, and providing immediate support—while human professionals handle complex or high-risk cases. The future of therapy lies in collaboration between algorithms and empathy, where technology enhances rather than erases human connection.
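The division of labor described here, with AI handling first-line support and humans handling high-risk cases, can be sketched as a simple routing rule. This is an illustration only. The phrase lists, the `Route` names, and the `triage` function are hypothetical, and a keyword check like this is not a clinically validated risk screen.

```python
from enum import Enum


class Route(Enum):
    SELF_HELP = "self_help"        # chatbot continues with guided exercises
    HUMAN_REVIEW = "human_review"  # flagged for a clinician to follow up
    URGENT_HUMAN = "urgent_human"  # handed to a human immediately


# Hypothetical phrase lists; a real service would use clinically reviewed
# screening methods, not a short keyword list.
URGENT_PHRASES = ("kill myself", "end my life", "suicide")
ELEVATED_PHRASES = ("can't cope", "hopeless", "self-harm")


def triage(message: str) -> Route:
    """Route a message to the chatbot or to a human based on risk phrases."""
    # Normalize curly apostrophes so "can’t" and "can't" match alike.
    text = message.lower().replace("\u2019", "'")
    if any(phrase in text for phrase in URGENT_PHRASES):
        return Route.URGENT_HUMAN
    if any(phrase in text for phrase in ELEVATED_PHRASES):
        return Route.HUMAN_REVIEW
    return Route.SELF_HELP
```

The design choice worth noticing is the default direction of error: when in doubt, a responsible system should send the conversation to a person, because a missed high-risk message costs far more than an unnecessary escalation.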